Imagine a world where every digital screen you encounter, from a storefront window to a public kiosk, instantly recognizes your preferences and adapts its message just for you. This is the promise of hyper-personalization at scale, a defining retail and marketing trend of 2025. Moving far beyond basic demographic targeting, this revolution is powered by dynamic displays that use artificial intelligence, real-time data, and sophisticated sensors to tailor content on the fly for each viewer. It represents the convergence of digital signage and one-to-one marketing, achieving a level of relevance previously impossible in public spaces.

The technological foundation for this adaptive display revolution is a tight integration of hardware and software. Modern displays are equipped with sensors, such as cameras with computer vision or integrated mobile-device detection, that gather anonymized contextual cues like approximate age, gender, and mood in real time, alongside environmental inputs such as weather conditions. These inputs are processed by cloud-based AI, which cross-references them with consented first-party data, purchase history, or immediate behavioral intent. In milliseconds, the system selects and renders the most relevant product advertisement, promotional offer, or informational content designed to resonate with that specific moment and person.

This shift transforms passive screens into active conversational interfaces within the physical environment. The intended result is higher engagement and conversion efficiency, as marketing messages cut through the noise with greater accuracy. For businesses, it means maximizing the impact of every single display impression, reducing content waste, and building deeper customer relationships through recognized relevance. For consumers, it delivers a curated, helpful, and intuitively responsive experience. As we advance through 2025, adaptive displays are setting a new standard, showing that true personalization can be achieved at a mass scale.

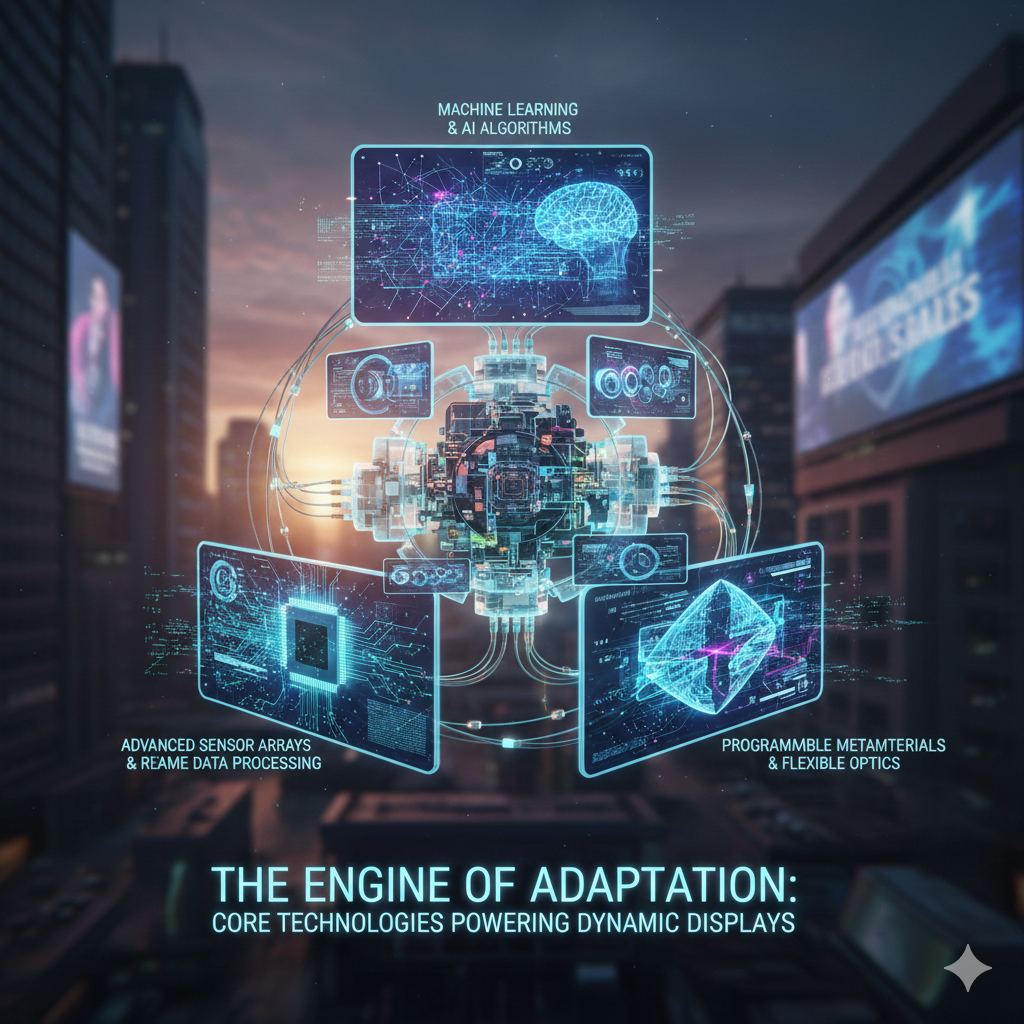

The Engine of Adaptation: Core Technologies Powering Dynamic Displays

The sophisticated responsiveness of a dynamic display is driven by a layered technology stack, each component essential for translating real-world observation into a personalized digital message. At the forefront are the advanced sensor arrays and data inputs that serve as the system’s eyes and ears. Modern displays incorporate a suite of anonymized sensing technologies, including compact cameras with embedded computer vision, Bluetooth or Wi-Fi signal detectors for nearby mobile device identification, and even environmental sensors. These tools gather immediate, contextual data points such as approximate demographic cues, dwell time, group size, current weather, or the time of day. Crucially, this stage focuses on aggregated or anonymized data to respect privacy, creating a contextual snapshot of the moment rather than identifying a specific individual.

This raw data is transmitted via high-speed connectivity to the system’s brain: cloud-based artificial intelligence and machine learning platforms. This is where true adaptation is engineered. Powerful algorithms process the incoming sensor data in real time, cross-referencing it with consented first-party data from customer relationship management systems, past purchase histories, and real-time behavioral analytics. For instance, the AI might recognize a returning customer via an anonymized mobile ID token, recall their last purchase, and understand they are lingering in the shoe department. This intelligence layer makes the critical millisecond-scale decision about what content will be most relevant, effectively predicting intent and curating the subsequent message.

The decision from the AI activates the third core component, the dynamic content management system and media engine. This is not a simple slideshow but a sophisticated real-time rendering platform. It houses a vast library of pre-approved creative assets, video clips, promotional offers, and copy variants, all tagged with metadata that defines their appropriate use cases. Upon instruction from the AI, the system dynamically assembles the optimal combination of these elements, generating a unique piece of content on the fly. It can adjust imagery, product highlights, promotional text, and even language, ensuring the final output is a bespoke creation for the observed context and predicted preference.

Uniting these elements is the critical layer of integrated software and application programming interfaces. This connective tissue allows the sensors, the cloud AI, the content engine, and the physical display hardware to communicate with minimal latency. Robust APIs pull live data from external sources, such as inventory databases, to ensure promoted items are in stock, or from weather services to align messages with conditions. This integration creates a closed-loop system where every action, from a viewer’s engagement to a subsequent purchase, can be fed back into the AI model to refine and improve future content decisions, fostering a cycle of continuous learning and optimization.

Ultimately, the tangible output of this complex technological engine is the advanced display hardware itself, which has evolved far beyond a simple monitor. These are high-brightness, reliable screens designed for constant operation, often with interactive touch or gesture recognition capabilities. Their true advancement lies in their programmability and connectivity, acting as the perfect canvas for the real-time content delivered by the system. Together, this integrated stack of sensors, connectivity, AI, content software, and hardware transforms a static sign into an adaptive interface, capable of delivering the long-promised dream of one-to-one communication in public spaces.

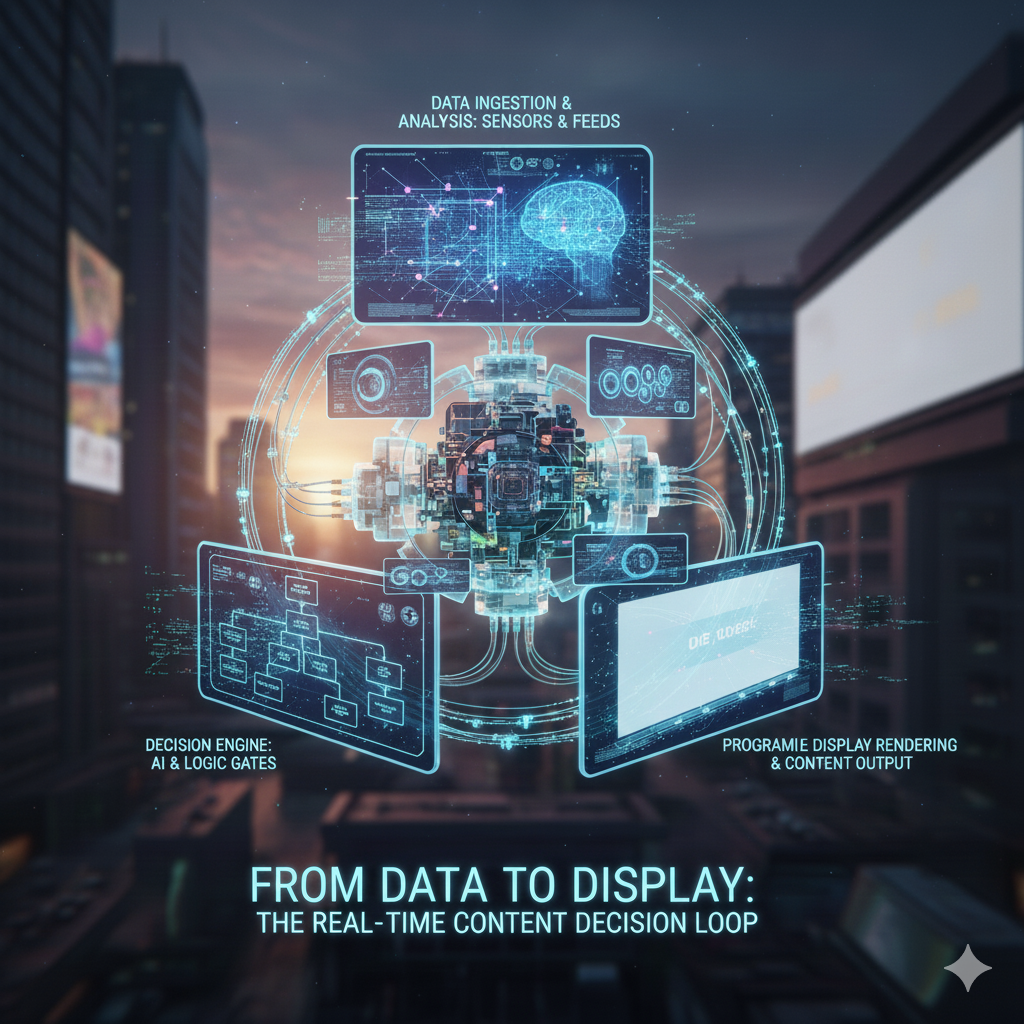

From Data to Display: The Real-Time Content Decision Loop

The real-time content decision loop begins the instant a person enters the detection zone of a dynamic display, initiating a seamless, high-speed process that transforms raw data into a personalized visual message. This continuous cycle is activated by the sensor suite, which captures initial contextual signals. These signals may include an anonymized visual estimate of demographic traits, the detection of a mobile device emitting a previously consented identification token, or simple behavioral cues like dwell time and direction of gaze. This initial data packet, timestamped and encrypted, forms the foundational trigger, signaling the system that a potential engagement opportunity has begun and setting the decision machinery in motion.
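To make the idea of an initial data packet concrete, here is a minimal sketch of what one might look like. The `ContextSignal` class and all of its field names are hypothetical, chosen only for illustration; the article does not specify a schema. Encryption is assumed to be handled by the transport layer and is not shown.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ContextSignal:
    """One anonymized observation from a display's sensors (hypothetical schema)."""

    display_id: str
    dwell_seconds: float
    group_size: int = 1
    age_band: Optional[str] = None       # coarse estimate such as "25-34", never a raw image
    consent_token: Optional[str] = None  # set only when the viewer has opted in
    captured_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Serialize for transport; encryption is assumed to happen downstream.
        return json.dumps(asdict(self), sort_keys=True)


signal = ContextSignal(display_id="kiosk-07", dwell_seconds=4.2, age_band="25-34")
payload = json.loads(signal.to_json())
```

Keeping the packet small and free of raw imagery is one way to reflect the anonymized, contextual nature of the trigger described above.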

This collected data is immediately routed through a secure cloud gateway to the artificial intelligence decision engine, the core of the loop. Here, advanced algorithms perform a lightning-fast analysis, comparing the live input against a vast repository of historical data, pre-defined business rules, and predictive models. The AI cross-references the anonymous viewer signals with consented customer profiles, real-time inventory levels, current promotional calendars, and even external data feeds like local weather or time of day. Its objective is to calculate the highest-probability content outcome, essentially asking what single piece of information or offer would be most relevant and likely to drive a desired action for this specific individual in this exact context.
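As a rough illustration of this step, the sketch below scores candidate content against the live context and applies one business rule (skip out-of-stock items). It is a deliberately simple additive score, not a description of any vendor's engine; `Candidate`, `choose_content`, the `0.5` tag weight, and the sample data are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Candidate:
    content_id: str
    sku: str
    tags: FrozenSet[str]  # contexts the asset suits, e.g. {"rain", "cold"}
    base_score: float     # prior relevance, e.g. from a predictive model


def choose_content(
    candidates: List[Candidate],
    context_tags: FrozenSet[str],
    inventory: Dict[str, int],
    default_id: str = "brand-generic",
) -> str:
    """Return the highest-scoring in-stock candidate, or a generic fallback."""
    best_id, best_score = default_id, float("-inf")
    for c in candidates:
        if inventory.get(c.sku, 0) <= 0:
            continue  # business rule: never promote an out-of-stock item
        # Illustrative score: prior relevance plus a bonus per matching context tag.
        score = c.base_score + 0.5 * len(c.tags & context_tags)
        if score > best_score:
            best_id, best_score = c.content_id, score
    return best_id


candidates = [
    Candidate("ad-umbrella", "U1", frozenset({"rain"}), 0.2),
    Candidate("ad-sunglasses", "S1", frozenset({"sunny"}), 0.6),
    Candidate("ad-raincoat", "R1", frozenset({"rain", "cold"}), 0.4),
]
inventory = {"U1": 12, "S1": 5, "R1": 0}
selected = choose_content(candidates, frozenset({"rain", "cold"}), inventory)
```

In this example the raincoat matches the context best but is out of stock, so the umbrella ad is selected instead; with nothing in stock, the function falls back to the generic asset.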

Within milliseconds, the AI engine reaches a decision and sends a precise instruction set to the dynamic content management system. The instruction is not a full video file but a recipe that calls upon specific modular content assets from a pre-approved library. The system dynamically assembles these assets, which may include a background image, a product video, a tailored headline, a personalized promotional offer, and a call-to-action button. This assembly is not a random selection but a coherent composition based on the AI’s prediction, ensuring all visual and textual elements work in harmony to reflect the inferred intent or preference of the viewer standing before the screen.
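The recipe idea can be sketched as a small lookup-and-validate step. The library contents, slot names, and the `assemble` function below are hypothetical; the point is only that the instruction carries asset identifiers, and the content system resolves them against a pre-approved library, rejecting anything that is missing or placed in the wrong slot.

```python
from typing import Any, Dict

# Hypothetical pre-approved library: asset id -> the slot it may fill and where it lives.
ASSET_LIBRARY: Dict[str, Dict[str, str]] = {
    "bg-rainy-street": {"slot": "background", "uri": "assets/bg/rainy-street.jpg"},
    "vid-umbrella-15s": {"slot": "product_video", "uri": "assets/video/umbrella-15s.mp4"},
    "cta-scan-qr": {"slot": "call_to_action", "uri": "assets/cta/scan-qr.png"},
}


def assemble(recipe: Dict[str, Any], library: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Resolve a recipe of asset ids into a layout of concrete URIs and text."""
    layout = {"headline": recipe["headline"], "offer": recipe.get("offer", "")}
    for slot, asset_id in recipe["assets"].items():
        asset = library.get(asset_id)
        if asset is None:
            raise KeyError(f"asset not in approved library: {asset_id}")
        if asset["slot"] != slot:
            raise ValueError(f"{asset_id} cannot fill slot {slot!r}")
        layout[slot] = asset["uri"]
    return layout


recipe = {
    "headline": "Caught in the rain?",
    "offer": "10% off umbrellas today",
    "assets": {
        "background": "bg-rainy-street",
        "product_video": "vid-umbrella-15s",
        "call_to_action": "cta-scan-qr",
    },
}
layout = assemble(recipe, ASSET_LIBRARY)
```

Because only identifiers and short text travel in the instruction, the heavy media files can already be cached near the screen.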

The final assembled content is then streamed or pushed to the physical display hardware, completing the loop from data to display. This delivery occurs over high-bandwidth networks with minimal latency, ensuring the personalized content appears fluidly and without noticeable delay to the viewer. The screen refreshes to show a message that feels intuitively crafted for them, whether it’s highlighting a favorite brand, offering a complementary product to a past purchase, or presenting a weather-appropriate item. This moment of recognition and relevance is the critical output of the entire loop, designed to capture attention and foster engagement in a way generic signage cannot.

Crucially, the loop does not end with content delivery. The system immediately enters a feedback and optimization phase. The viewer’s subsequent interaction, be it prolonged dwell time, an interaction via touch, a mobile scan, or even a walk-away, is captured as new data. This performance metric is fed back into the AI engine, reinforcing the learning models about what content strategies work for specific audience segments and contexts. This closed-loop system ensures that every interaction contributes to a smarter, more effective decision process in the future, creating a perpetually optimizing cycle where personalization becomes increasingly accurate and impactful with each use.
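One simple way to picture this feedback phase is an epsilon-greedy bandit that tracks engagement rates per audience segment. This is a swapped-in textbook technique used here for illustration, not the method any particular platform is known to use; the `EngagementLearner` class, segment labels, and content ids are hypothetical.

```python
import random
from collections import defaultdict
from typing import Iterable, Optional


class EngagementLearner:
    """Epsilon-greedy selection over content, tracked per audience segment."""

    def __init__(self, content_ids: Iterable[str], epsilon: float = 0.1,
                 seed: Optional[int] = None) -> None:
        self.content_ids = list(content_ids)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = defaultdict(int)    # (segment, content_id) -> times shown
        self.engaged = defaultdict(int)  # (segment, content_id) -> times engaged

    def rate(self, segment: str, content_id: str) -> float:
        shown = self.shows[(segment, content_id)]
        return self.engaged[(segment, content_id)] / shown if shown else 0.0

    def pick(self, segment: str) -> str:
        # Explore with probability epsilon, otherwise exploit the best observed rate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.content_ids)
        return max(self.content_ids, key=lambda c: self.rate(segment, c))

    def record(self, segment: str, content_id: str, engaged: bool) -> None:
        # Feed the viewer's reaction (touch, scan, or walk-away) back into the counts.
        self.shows[(segment, content_id)] += 1
        if engaged:
            self.engaged[(segment, content_id)] += 1


# epsilon=0.0 disables exploration so the demo is reproducible.
learner = EngagementLearner(["ad-umbrella", "ad-sunglasses"], epsilon=0.0)
learner.record("rainy-evening", "ad-umbrella", engaged=True)
learner.record("rainy-evening", "ad-sunglasses", engaged=False)
choice = learner.pick("rainy-evening")
```

In production a small non-zero `epsilon` would keep the system occasionally testing alternatives, which is what lets the loop keep learning rather than locking onto early results.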

Conclusion

The seamless operation of the real-time content decision loop signifies a fundamental shift in how physical spaces communicate, moving from static broadcasting to dynamic, one-to-one interaction. This technology represents the culmination of data intelligence, where every sensor input and every algorithmic prediction is harnessed to serve the singular goal of relevance. By successfully closing the loop between observation, analysis, action, and feedback, these systems transform ordinary touchpoints into valuable conversational moments, ensuring that communication is never wasted but is instead continuously refined and personalized.

This capability, however, firmly anchors the future of marketing and retail in a landscape defined by responsible data stewardship. The power to adapt a message in real-time based on inferred intent carries with it a profound responsibility to protect individual privacy and maintain transparency. The sustainable success of this model depends on a balanced value exchange, where the benefits of hyper-relevant content and streamlined experiences for the consumer are matched by unwavering ethical standards, clear consent frameworks, and robust data security from the organizations that deploy it.

Looking forward, the real-time decision loop is not a static achievement but a scalable foundation. The underlying principle of instantaneous, data-driven personalization will extend beyond digital screens to power adaptive lighting, soundscapes, and even product placement within stores. As artificial intelligence grows more sophisticated and integrated data ecosystems become more holistic, this loop will enable environments that are not merely responsive but truly anticipatory, crafting cohesive and intuitively personalized journeys that redefine the very nature of customer engagement in the physical world.

Frequently Asked Questions

1. How do these systems ensure personal privacy while using sensors and data for personalization?

Reputable systems are designed with privacy-first principles, relying on anonymization and aggregation rather than individual identification. Sensors typically process data like general demographic cues or device presence in real time without storing personally identifiable images or information. Personalization for known customers is usually triggered only by a consented, anonymized token from a brand’s official mobile app, ensuring the individual has opted into the experience. Strict data governance protocols and compliance with regulations like GDPR are fundamental, focusing on using data to serve relevant content without compromising personal identity.

2. What happens if multiple people are standing in front of the display at the same time?

Advanced systems are engineered to handle group scenarios through sophisticated computer vision and decision logic. The technology can detect multiple viewers and default to a neutral, broadly appealing content loop or employ a strategy that prioritizes the most engaged individual, such as the person looking at the screen longest. Alternatively, it can cycle through different personalized messages in rapid succession or display a general advertisement that incorporates elements relevant to the aggregated demographic mix of the group, ensuring the experience remains functional and engaging for all viewers.
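The group logic described in this answer could be sketched roughly as follows. The `Viewer` fields, the strategy names, and the two-second lead threshold are assumptions made for the example, not values taken from any real system.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Viewer:
    viewer_id: str       # ephemeral per-session label, not an identity
    gaze_seconds: float  # how long this viewer has looked at the screen
    segment: str         # coarse audience segment inferred for this viewer


def choose_audience_strategy(
    viewers: List[Viewer], min_lead_seconds: float = 2.0
) -> Tuple[str, Optional[str]]:
    """Return (strategy, target_segment) for the people currently in front of the display."""
    if not viewers:
        return ("neutral_loop", None)
    if len(viewers) == 1:
        return ("personalized", viewers[0].segment)
    ranked = sorted(viewers, key=lambda v: v.gaze_seconds, reverse=True)
    # Prioritize one viewer only when they are clearly the most engaged.
    if ranked[0].gaze_seconds - ranked[1].gaze_seconds >= min_lead_seconds:
        return ("most_engaged", ranked[0].segment)
    return ("neutral_loop", None)


group = [Viewer("a", 6.0, "runner"), Viewer("b", 1.5, "commuter")]
strategy = choose_audience_strategy(group)
```

Falling back to the neutral loop when no viewer clearly leads avoids showing one person's tailored message to a whole group.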

3. Is this technology only viable for large retailers with big budgets?

While early adoption was led by large enterprises, the technology is becoming increasingly modular and accessible. Cloud-based software-as-a-service models and more affordable sensor hardware are lowering entry barriers. Smaller businesses can start with focused implementations, such as a single smart display in a high-traffic area or using simpler triggers like time of day or weather, rather than full individual personalization. This allows them to benefit from dynamic content management and basic contextual adaptation, scaling the sophistication of their personalization efforts as their needs and capabilities grow.
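For a sense of how small such a starting point can be, here is a minimal rule-based sketch that picks a playlist from time of day and weather alone, with no sensors or AI involved. The `pick_playlist` function, the playlist names, and the time windows are illustrative assumptions.

```python
from datetime import time


def pick_playlist(now: time, weather: str) -> str:
    """Choose a content playlist from two simple contextual triggers."""
    if weather == "rain":
        return "rainy-day-offers"  # weather takes priority over time of day
    if time(6, 0) <= now < time(11, 0):
        return "breakfast"
    if time(11, 0) <= now < time(15, 0):
        return "lunch"
    return "default"


morning_choice = pick_playlist(time(8, 30), "clear")
```

A business could run rules like these on a single display first, then replace them with learned models once it has enough engagement data.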